
My Links

- WelcomeGuest - starting points on TWiki

- TWikiUsersGuide - complete TWiki documentation, Quick Start to Reference

- Sandbox - try out TWiki on your own

- AlienBSandbox - just for me

My Personal Preferences

- Preference for the editor, default is the WYSIWYG editor. The options are raw, wysiwyg:

- Set EDITMETHOD = wysiwyg

- Fixed pulldown menu-bar, on or off. The menu-bar hides automatically if off.

- Set FIXEDTOPMENU = off

- Show tool-tip topic info on mouse-over of WikiWord links, on or off:

- Set LINKTOOLTIPINFO = off

- More preferences

TWiki has system-wide preference settings defined in TWikiPreferences. You can customize preference settings to your needs: to override a system setting, (1) do a "raw view" on TWikiPreferences, (2) copy a "Set VARIABLE = value" bullet, (3) do a "raw edit" of your user profile page, (4) add the bullet to the bullet list above, and (5) customize the value as needed. Make sure the settings render as real bullets (in "raw edit", a bullet requires 3 or 6 spaces before the asterisk).

Related Topics

- ChangePassword for changing your password

- ChangeEmailAddress for changing your email address

- UserList has a list of other TWiki users

- UserDocumentationCategory is a list of TWiki user documentation

- UserToolsCategory lists all TWiki user tools


BATCH System

* Software

- Batch system: Torque 4.2.10 (home made) and Maui 3.3.2 (home made)

2014:

* Software

- Torque 4.1.4 (home made), Maui 3.3.2 (home made)

-- TWikiAdminUser - 2014-08-12


Computing Farm

Hardware: The T1_RU_JINR computing farm includes:

- 5 crates of Supermicro processor blades (I. Computing Element Accounting farm).

- 5 crates of Supermicro processor blades: 100 machines: 2xCPU (Xeon X5675 @ 3.07GHz); 48GB RAM; 2x1000GB SATA-II; 2x1GbE.

- Total: 1200 cores/slots in the batch system.

-- TWikiAdminUser - 2014-08-12

Computing Service

LIT Farm

2019: By 2019, the grid infrastructure of the JINR Tier1 site includes:

- Worker Nodes (WN): typically SuperMicro Blade, 415 64-bit machines: 2 x CPU, 6-12 cores/CPU

- Total: 132813 HEP-kSI2k

- Total: 9200 cores for batch

2018:

- Worker Nodes (WN): typically SuperMicro Blade, 331 64-bit machines: 2 x CPU, 6-10 cores/CPU

- Total: 6512 cores/slots for batch

| host | model | nCPU | CPU | MHz | L2+L3 cache (KB) | RAM (GB) | Main board | HEP-SPEC06 | HS06 per core |
|---|---|---|---|---|---|---|---|---|---|
| wna099 | Supermicro Pr. Blade | 12 | Xeon X5675 | 3066 | 3072+24576 | 48 | Supermicro B8DTT | 169.16 | 14.10 |
| wna100 | Supermicro Pr. Blade | 20 | E5-2680v2 | 2800 | 2560+25600 | 64 | Supermicro B9DRT | 316.27 | 15.81 |
| wna120 | Supermicro Pr. Blade | 20 | E5-2680v2 | 2800 | 2560+25600 | 64 | Supermicro B9DRT | 315.73 | 15.79 |
| wna140 | Supermicro Pr. Blade | 20 | E5-2680v2 | 2800 | 2560+25600 | 64 | Supermicro B9DRT | 316.18 | 15.80 |
| wna160 | Supermicro Pr. Blade | 20 | E5-2680v2 | 2800 | 2560+25600 | 64 | Supermicro B9DRT | 316.35 | 15.81 |
| wna180 | Supermicro Pr. Blade | 20 | E5-2680v2 | 2800 | 2560+25600 | 64 | Supermicro B9DRT | 323.31 | 16.17 |
| wna200 | Supermicro Pr. Blade | 20 | E5-2680v2 | 2800 | 2560+25600 | 64 | Supermicro B9DRT | 324.09 | 16.20 |
| wna221 | Supermicro Mc. Blade | 20 | E5-2640v4 | 2400 | 2560+25600 | 128 | Supermicro B1DRi | 309.19 | 15.46 |
| wna248 | Supermicro Mc. Blade | 20 | E5-2640v4 | 2400 | 2560+25600 | 128 | Supermicro B1DRi | 309.19 | 15.46 |
| wna276 | Supermicro Mc. Blade | 32 | E5-2683v4 | 2100 | 4096+40960 | 128 | Supermicro B1DRi | 429.24 | 13.41 |
| wna304 | Supermicro Mc. Blade | 32 | E5-2683v4 | 2100 | 4096+40960 | 128 | Supermicro B1DRi | 431.88 | 13.50 |

Sum 2018Q4:

| Nodes | Cores | HS06 per core | HS06 | kHS06 |
|---|---|---|---|---|
| 100 x wna0XX | 1200 | 14.10 | 16920 | 16.92 |
| 60 x wna1XX | 1200 | 15.80 | 18960 | 18.96 |
| 60 x wna160 | 1200 | 16.06 | 19275 | 19.27 |
| 28 x wna221 | 560 | 15.46 | 8579 | 8.58 |
| 28 x wna248 | 560 | 15.46 | 8579 | 8.58 |
| 28 x wna276 | 896 | 13.41 | 12019 | 12.02 |
| 28 x wna304 | 896 | 13.50 | 12096 | 12.09 |

Total: 96428 HS06 = 96.43 kHS06 (wna000-wna331)

Total: 24107 HEP-kSI2k

Total: 6512 cores

Average HEP-SPEC06 per Core = 14.81 (wna000-wna331)
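The 2018Q4 totals can be reproduced from the per-group rows of the sum table. A minimal bash/awk sketch, assuming the rows are fed as whitespace-separated `label cores hs06` triples (an input format chosen here for illustration) and assuming the conversion 1 HEP-kSI2k = 4 HS06, which is the ratio implied by 96428 HS06 = 24107 HEP-kSI2k:

```bash
#!/usr/bin/env bash
# Sum per-group cores and HS06 and derive the farm totals.
# Input (hypothetical format): one "label cores hs06" row per node group.
summarize_hs06() {
  awk '
    { cores += $2; hs06 += $3 }                      # accumulate cores and HS06
    END {
      printf "Total: %d HS06 = %.2f kHS06\n", hs06, hs06 / 1000
      printf "Total: %d HEP-kSI2k\n", hs06 / 4       # assumed 4 HS06 per kSI2k
      printf "Total: %d cores\n", cores
      printf "Average HEP-SPEC06 per core = %.2f\n", hs06 / cores
    }'
}

summarize_hs06 <<'EOF'
wna0XX 1200 16920
wna1XX 1200 18960
wna160 1200 19275
wna221 560 8579
wna248 560 8579
wna276 896 12019
wna304 896 12096
EOF
```

With the 2018Q4 rows this prints the same totals as above (96428 HS06, 24107 HEP-kSI2k, 6512 cores, 14.81 HS06 per core); adding a new node group only means appending one row.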

* Software

- OS: Scientific Linux release 6 x86_64.

- GCC: gcc (GCC) 4.4.7 20120313 (Red Hat 4.4.7-3)

- C++: g++ (GCC) 4.4.7 20120313 (Red Hat 4.4.7-3)

- FC: GNU Fortran (GCC) 4.4.7 20120313 (Red Hat 4.4.7-3), FLAGS: -O2 -pthread -fPIC -m32

- SPECall_cpp2006 with 32-bit binaries

- SPEC2006 version 1.1

- BATCH : Torque 4.2.10 (home made)

- Maui 3.3.2 (home made)

- CMS Phedex

- dCache-3.2

- Enstore 4.2.2 for tape robot.

- 100 64-bit machines: 2 x CPU (Xeon X5675 @ 3.07GHz, 6 cores per processor); 48GB RAM, 2x1000GB SATA-II; 2x1GbE.

- 148 64-bit machines: 2 x CPU (Xeon E5-2680 v2 @ 2.80GHz, 10 cores per processor), 64GB RAM; 2x1000GB SATA-II; 2x1GbE.

- OS: Scientific Linux release 6 x86_64

- BATCH : Torque 4.2.10 (home made)

- Maui 3.3.2 (home made)

- CMS Phedex

- 100 64-bit machines: 2 x CPU (Xeon X5675 @ 3.07GHz, 6 cores per processor); 48GB RAM, 2x1000GB SATA-II; 2x1GbE.

- 120 64-bit machines: 2 x CPU (Xeon E5-2680 v2 @ 2.80GHz, 10 cores per processor), 64GB RAM; 2x1000GB SATA-II; 2x1GbE.

- OS: Scientific Linux release 6 x86_64.

- BATCH : Torque 4.1.4 (home made)

- Maui 3.3.2 (home made)

- CMS Phedex

- 5 crates of Supermicro processor blades (I. Computing Element Accounting farm).

- 5 crates of Supermicro processor blades.

- 100 machines: 2xCPU (Xeon X5675 @ 3.07GHz); 48GB RAM; 2x1000GB SATA-II; 2x1GbE.

- OS: Scientific Linux release 6 x86_64, Scientific Linux release 5 x86_64 (PhEDEx)

- BATCH : Torque 4.1.4 (home made)

- Maui 3.3.2 (home made)

- PhEDEx

Computing Farm

2019:

* Hardware

- Worker Nodes (WN): typically SuperMicro Blade, 415 64-bit machines: 2 x CPU, 6-12 cores/CPU

- Total: 132813 HEP-kSI2k

- Total: 9200 cores for batch

2018:

* Hardware

- Worker Nodes (WN): typically SuperMicro Blade, 275 64-bit machines: 2 x CPU, 6-10 cores/CPU

- Total: 4720 cores/slots for batch

2017:

* Hardware

- Worker Nodes (WN): typically SuperMicro Blade

- 100 64-bit machines: 2 x CPU (Xeon X5675 @ 3.07GHz, 6 cores per processor); 48GB RAM, 2x1000GB SATA-II; 2x1GbE.

- 148 64-bit machines: 2 x CPU (Xeon E5-2680 v2 @ 2.80GHz, 10 cores per processor), 64GB RAM; 2x1000GB SATA-II; 2x1GbE.

- 100 64-bit machines: 2 x CPU (Xeon X5675 @ 3.07GHz, 6 cores per processor); 48GB RAM, 2x1000GB SATA-II; 2x1GbE.

- 120 64-bit machines: 2 x CPU (Xeon E5-2680 v2 @ 2.80GHz, 10 cores per processor), 64GB RAM; 2x1000GB SATA-II; 2x1GbE.

Total: 3600 core/slots for batch.

- 5 crates of Supermicro processor blades (I. Computing Element Accounting farm).

- 5 crates of Supermicro processor blades: 100 machines: 2xCPU (Xeon X5675 @ 3.07GHz); 48GB RAM; 2x1000GB SATA-II; 2x1GbE.

Computing Service

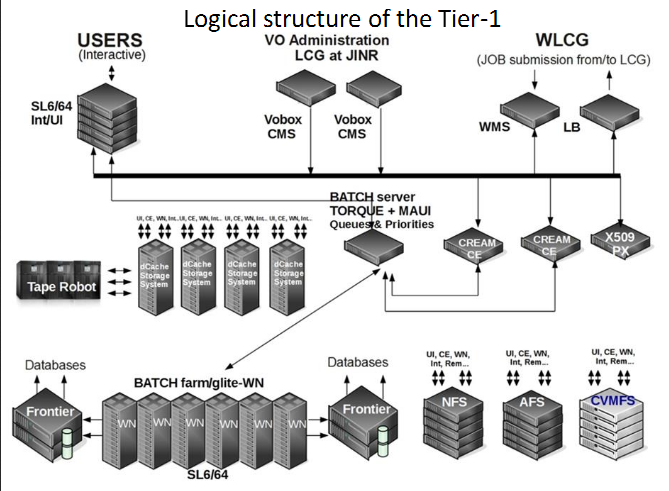

2019: The JINR Tier1 computing service comprises:

- interactive cluster (five 64-bit machines)

- computing farm:

- cvmfs

- Software:

* GCC: gcc (GCC) 4.4.7 20120313 (Red Hat 4.4.7-3)

* C++: g++ (GCC) 4.4.7 20120313 (Red Hat 4.4.7-3)

* FC: GNU Fortran (GCC) 4.4.7 20120313 (Red Hat 4.4.7-3), FLAGS: -O2 -pthread -fPIC -m32

* SPECall_cpp2006 with 32-bit binaries

* SPEC2006 version 1.1

* BATCH : Torque 4.2.10 (home made)

* Maui 3.3.2 (home made)

* CMS Phedex

* dCache-3.2

* Enstore 4.2.2 for tape robot.

* WLCG (2xCREAM, 4xARGUS, BDII top, BDII site, APEL parsers, APEL publisher, EMI-UI, 220xEMI-WN + gLExec-wn, 4xFTS3, LFC, WMS, L&B, glite-proxyrenewal)

* cvmfs

* FTS

The Torque 4.2.10/Maui 3.3.2 software (custom build) is used as the resource manager and task scheduler. The PhEDEx software is used to manage CMS data placement. The standard WLCG software stack is used for data processing. FTS (File Transfer Service) is used for data transfer.

-- TWikiAdminUser - 2019-02-28

ConfigCMSAtGit

Embedding the current CMS site configuration into git:

{{{
########## T1_RU_JINR ##########
cd
mkdir -p t
cd t
rm -rf siteconf
# Fresh clone of the CMS siteconf repository (Kerberos authentication)
git clone https://:@git.cern.ch/kerberos/siteconf
cd siteconf
# Mirror JobConfig, skipping files whose names end in -NN and CVS/ directories
rsync -aH --delete --exclude="*-[0-9][0-9]" --exclude=CVS/ --delete-excluded \
    --out-format=%f%L \
    vvm@ui01.jinr-t1.ru:/opt/exp_soft/cms/SITECONF/local/JobConfig/ \
    T1_RU_JINR/JobConfig
# ----------------------
rsync -aH --delete --exclude="*-[0-9][0-9]" --exclude=CVS/ --delete-excluded \
    --out-format=%f%L \
    vvm@ui01.jinr-t1.ru:/opt/exp_soft/cms/SITECONF/local/PhEDEx/ \
    T1_RU_JINR/PhEDEx
# ----------------------
git add T1_RU_JINR/PhEDEx/*
git add T1_RU_JINR/JobConfig/*
git commit -m "Latest configs"
# Review the commit before pushing
git show
git push origin master

########## T2_RU_JINR ##########
cd
mkdir -p t
cd t
rm -rf siteconf
git clone https://:@git.cern.ch/kerberos/siteconf
cd siteconf
rsync -aH --delete --exclude="*-[0-9][0-9]" --exclude=CVS/ --delete-excluded \
    --out-format=%f%L \
    vvm@lxpub.jinr.ru:/opt/exp_soft/cms/SITECONF/local/JobConfig/ \
    T2_RU_JINR/JobConfig
# ----------------------
rsync -aH --delete --exclude="*-[0-9][0-9]" --exclude=CVS/ --delete-excluded \
    --out-format=%f%L \
    vvm@lxpub.jinr.ru:/opt/exp_soft/cms/SITECONF/local/PhEDEx/ \
    T2_RU_JINR/PhEDEx
# ----------------------
git add T2_RU_JINR/PhEDEx/*
git add T2_RU_JINR/JobConfig/*
git commit -m "Latest configs"
git show
git push origin master
}}}

-- TWikiAdminUser - 2014-08-12
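The T1 and T2 sections of the siteconf procedure differ only in the site name and the source host. A hedged sketch of folding them into one bash function, assuming the same SITECONF paths and `vvm` account as above; `sync_siteconf`, `run` and the `DRY_RUN` switch are hypothetical names introduced for illustration, and with `DRY_RUN=1` the commands are only printed:

```bash
#!/usr/bin/env bash
# Hypothetical refactoring of the two site sections into one function.
# With DRY_RUN=1 the commands are printed instead of executed.

run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "$*"      # show the command (shell quoting is not preserved)
  else
    "$@"
  fi
}

# Usage (inside a fresh siteconf clone): sync_siteconf SITE HOST
sync_siteconf() {
  local site=$1 host=$2 dir
  for dir in JobConfig PhEDEx; do
    # Mirror one SITECONF subdirectory, skipping *-NN files and CVS/ dirs.
    run rsync -aH --delete --exclude='*-[0-9][0-9]' --exclude=CVS/ --delete-excluded \
      --out-format='%f%L' \
      "vvm@${host}:/opt/exp_soft/cms/SITECONF/local/${dir}/" "${site}/${dir}"
    # Stage the whole directory; with git 2.x this also stages deleted files.
    run git add "${site}/${dir}"
  done
  run git commit -m "Latest configs"
  run git show
  run git push origin master
}

DRY_RUN=1 sync_siteconf T1_RU_JINR ui01.jinr-t1.ru
DRY_RUN=1 sync_siteconf T2_RU_JINR lxpub.jinr.ru
```

Staging the directory rather than `dir/*` is a deliberate choice in this sketch: files that `rsync --delete` removed locally are then also removed from the repository.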

Dashboard

The Dashboard project for LHC experiments (http://dashboard.cern.ch) aims to provide a single entry point to the monitoring data collected from the distributed computing systems of the LHC virtual organizations. The Dashboard system is developed and supported by CERN IT. SAM3 is another tool for monitoring the reliability and availability of grid sites and grid services for CMS, ATLAS, LHCb and ALICE (more info: SAM3).

Dcap Client DCACHE_IO_TUNNEL

Hi,

it turns out that the DCACHE_IO_TUNNEL variable no longer works at all, and is not even needed.

This works:

LD_PRELOAD=libpdcap.so /bin/ls -l gssdcap://lxse-dc01.jinr.ru:22126//pnfs/jinr.ru/data/user/v/vmi

That is, the tunnel has to be specified in the URL: gssdcap.

This is of course a bug, since such changes should not appear in a minor release of the product. And in the sources I can see that the DCACHE_IO_TUNNEL variable is still checked.

We could write to dcache.org and ask them to restore the DCACHE_IO_TUNNEL scheme, but we would have to wait.

Or all users can change dcap to gssdcap in their scripts. The DCACHE_IO_TUNNEL definition can be left in place; it has no effect.

Best regards, Valery Mitsyn 2015-04-17 -- TWikiAdminUser - 2015-04-17
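For scripts that build dcap URLs, the switch to gssdcap can be done in one place. A small bash sketch; `to_gssdcap` is a hypothetical helper name, and it only rewrites the scheme, leaving host, door port and /pnfs path untouched (URLs that are not dcap:// pass through unchanged):

```bash
#!/usr/bin/env bash
# Hypothetical helper: rewrite dcap://... to gssdcap://... so the GSS tunnel is
# selected by the URL instead of DCACHE_IO_TUNNEL. Other URLs pass through.
to_gssdcap() {
  local url=$1
  if [[ $url == dcap://* ]]; then
    printf 'gssdcap://%s\n' "${url#dcap://}"
  else
    printf '%s\n' "$url"
  fi
}

url=$(to_gssdcap dcap://lxse-dc01.jinr.ru:22126//pnfs/jinr.ru/data/user/v/vmi)
echo "$url"
# Then, as in the message:
# LD_PRELOAD=libpdcap.so /bin/ls -l "$url"
```

Matching only a leading `dcap://` keeps the helper idempotent: a URL that already starts with `gssdcap://` is not rewritten a second time.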

Events

* The 8th International Conference "Distributed Computing and Grid-technologies in Science and Education" (GRID'2018)

* 26th Symposium on Nuclear Electronics and Computing - NEC'2017

* The 7th International Conference "Distributed Computing and Grid-technologies in Science and Education" (GRID'2016)

* XXV Symposium on Nuclear Electronics and Computing - NEC'2015

* The 6th International Conference "Distributed Computing and Grid-technologies in Science and Education" (GRID'2014)

* XXIV International Symposium on Nuclear Electronics & Computing - NEC'2013

Monitoring FTS

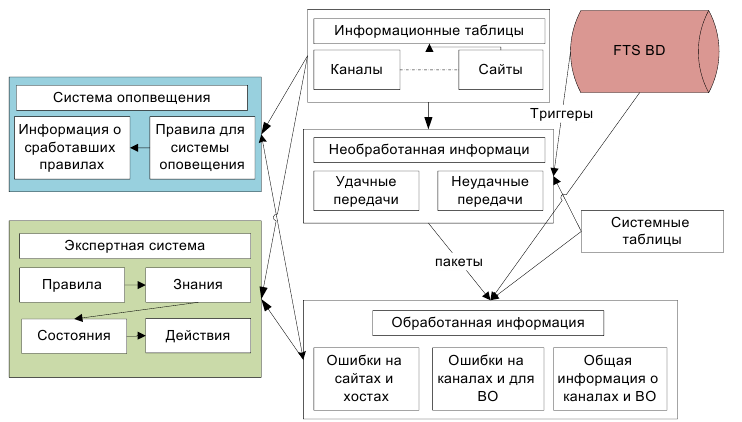

As part of the JINR-CERN cooperation, a full-featured monitoring system for the FTS service has been developed. After a detailed analysis of the file transfer service database, a data model for the monitoring system was designed that provides a convenient basis for generating various reports. Four main groups of users were identified during the design.

Users:

* Managers of virtual organizations,

* Administrators of grid sites,

* Top management,

* FTS service administrators.

System data model.

Phedex

PhEDEx CMS Data Transfer. The PhEDEx project provides the data placement and file transfer system for the CMS experiment. The project name is short for “Physics Experiment Data Export”. The PhEDEx components are:

- Transfer management database (TMDB), currently version 2.

- Transfer agents that manage the movement of files between sites. This also includes agents to migrate files to mass storage, to manage local mass storage stager pools and stage in files efficiently based on transfer demand, and to calculate file checksums when necessary before transfers.

- Management agents, in particular the allocator agent, which assigns files to destinations based on site data subscriptions, and agents that maintain file transfer topology routing information.

- Tools to manage transfer requests; CMS/RefDB/PubDB specific.

- Local agents for managing files locally, for instance as files arrive from a transfer request or a production farm, including any processing that needs to be done before they can be made available for transfer: massaging information, merging files, registering files into the catalogues, injecting into TMDB.

- Web monitoring tools.

GridTeam

Belov Sergey bsd@cv.jinr.ru

Kutovsky Nikolay kut@jinr.ru

Mitsin Valery vvm@mammoth.jinr.ru

-- TWikiAdminUser - 2014-08-12

dCache server at any time: http://se-hd02-mss.jinr-t1.ru/ -- TWikiAdminUser - 2017-07-17

Management: Korenkov V.V. korenkov@lxmx00.jinr.ru, Strizh T.A. strizh@jinr.ru

Welcome to TWiki at CERN.

TWiki® is a flexible, powerful, secure, yet simple Enterprise Wiki and Web Application Platform. Use TWiki for team collaboration, project management, document management, as a knowledge base and more on an intranet or on the Internet. Learn more.

- TWiki.WelcomeGuest

- TWiki.TWikiTutorial

- Editing.reference

- TWiki.UserDocumentationCategory

- Access Control

- TWiki Q&A

- Sandbox - give it a try

- Plugins - check out the possible extensions

| Alice | Atlas | CMS | LHCb |

- List of public webs

- Request a new web

| Online collaboration platform | Project development space | Document management system |
|---|---|---|
| Distributed teams work together seamlessly and productively! | Track actions, assign tasks and create automated reports! | Version controlled document repository plus powerful and flexible wiki functionality under one roof! |
| Knowledge base | Platform to create web applications | Replacement for an existing Intranet |
| TWiki is an organizational brain that brings tribal knowledge online, all neatly organized and categorized! | Blogs, RSS feeds and news feeds, forums, calendar applications and tons of other solutions to create real value for your users! | Eliminate the one-webmaster syndrome of outdated intranet content! |

Monitoring

- Monitoring At CMS CERN

- Monitoring At CMS JINR

- T1_RU_JINR at LHCOPN graph

- Local Monitoring At CMS dCache

- Dashboard

- FTS

- PhEDEx

Monitoring At CMS CERN Dashboard

T1_RU_JINR at CERN Dashboard -- TWikiAdminUser - 2014-08-14

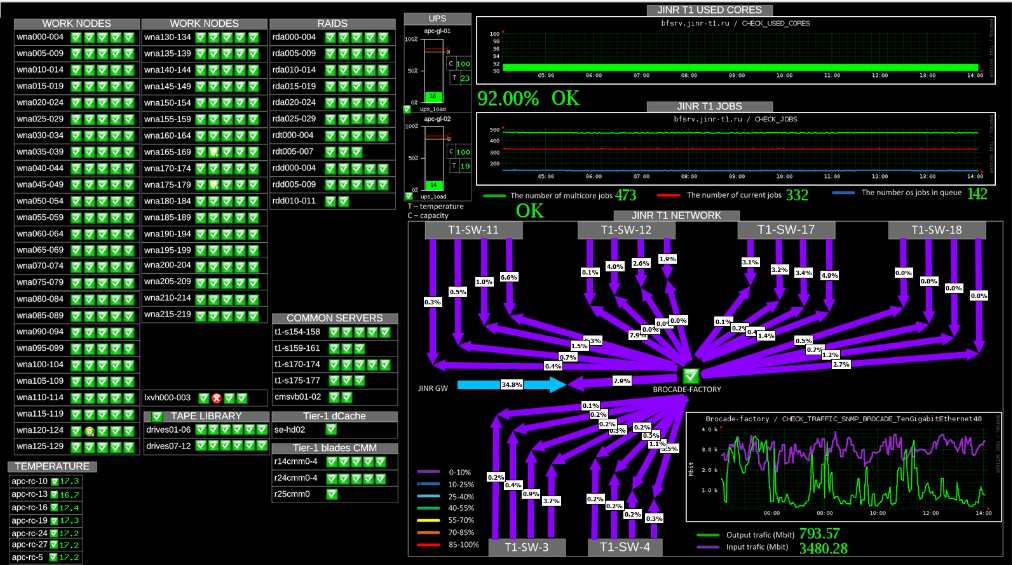

JINR Tier1 Monitoring

T1_RU_JINR at JINR :

- Monitoring the states of all nodes and services-from the supply system to the robotized tape library

- Global real time survey of the state of the whole computing complex

- In case of emergency, alerts are sent to authorized personnel via e-mail, SMS, etc.

- ~690 elements are monitored, with ~3500 checks in real time

Phedex - CMS Data Transfers

Monstr - MONitoring Services of TieR-1 (Monstr) at JINR (under development)

Login:visitor

Passwd:visitor

Dashboard

http://dashb-ssb.cern.ch/dashboard/request.py/sitehistory?site=T1_RU_JINR#currentView=default -- TWikiAdminUser - 2014-08-12

TWikiGroups » MonitoringT1Group

Use this group for access control of webs and topics.

- Member list:

- Set GROUP = TWikiAdminUser, AlexGolunov, IvanKashunin, IgorPelevanyuk, IvanKadochnikov

- Persons/group who can change the list:

- Set ALLOWTOPICCHANGE = MonitoringT1Group

My Links

- WelcomeGuest - starting points on TWiki

- TWikiUsersGuide - complete TWiki documentation, Quick Start to Reference

- Sandbox - try out TWiki on your own

- NatalieGSandbox - just for me

My Personal Preferences

- Preference for the editor, default is the WYSIWYG editor. The options are raw, wysiwyg:

- Set EDITMETHOD = wysiwyg

- Fixed pulldown menu-bar, on or off. The menu-bar hides automatically if off.

- Set FIXEDTOPMENU = off

- Show tool-tip topic info on mouse-over of WikiWord links, on or off:

- Set LINKTOOLTIPINFO = off

- More preferences

TWiki has system-wide preference settings defined in TWikiPreferences. You can customize preference settings to your needs: to override a system setting, (1) do a "raw view" on TWikiPreferences, (2) copy a "Set VARIABLE = value" bullet, (3) do a "raw edit" of your user profile page, (4) add the bullet to the bullet list above, and (5) customize the value as needed. Make sure the settings render as real bullets (in "raw edit", a bullet requires 3 or 6 spaces before the asterisk).

Related Topics

- ChangePassword for changing your password

- ChangeEmailAddress for changing your email address

- UserList has a list of other TWiki users

- UserDocumentationCategory is a list of TWiki user documentation

- UserToolsCategory lists all TWiki user tools


TWikiGroups » NetWorkGroup

Use this group for access control of webs and topics.

- Member list:

- Set GROUP = TWikiAdminUser, AndreyDolbilov, AndreyBaginyan

- Persons/group who can change the list:

- Set ALLOWTOPICCHANGE = NetWorkGroup

Networking

The network infrastructure is one of the most important components of JINR and MICC: it provides access to computing resources and makes it possible to work with big data. It is a high-speed, reliable network with a dedicated redundant data link to CERN (LHCOPN). LHCOPN, composed of many 10 Gbps links interconnecting the Tier-0 centre at CERN with the Tier-1 sites, has fulfilled its mission of providing stable, high-capacity connectivity.

The network architecture of the Tier-1 at JINR was built with a double route between the access level and the server level.

Each server has access to the network segment via two equivalent 10 Gbps links, with a total throughput of 20 Gbps.

The access level is connected to the distribution level by four 40 Gbps routes, giving an aggregate data rate of 160 Gbps with an oversubscription ratio of 1:3.
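The oversubscription ratio compares the server-facing bandwidth of an access block with its uplink bandwidth. A bash sketch of that arithmetic, treating the four 40 Gbps routes as the uplink of one access block; the count of 24 servers is a hypothetical figure chosen only because it reproduces the quoted 1:3, not a documented value:

```bash
#!/usr/bin/env bash
# Oversubscription: uplink capacity vs. server-facing (downstream) capacity.
# Link speeds come from the text; the server count is hypothetical.
servers=24            # hypothetical number of servers behind the uplinks
links_per_server=2    # two equivalent 10 Gbps links per server
server_link_gbps=10
uplinks=4             # four 40 Gbps routes to the distribution level
uplink_gbps=40

down=$(( servers * links_per_server * server_link_gbps ))
up=$(( uplinks * uplink_gbps ))
echo "downstream ${down} Gbps, uplink ${up} Gbps, uplink:downstream = 1:$(( down / up ))"
```

With these inputs the script reports 480 Gbps downstream against 160 Gbps of uplink, i.e. 1:3; plugging in the real number of servers per access block gives the actual ratio.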

The Tier-1 network segment is implemented on Brocade VDX 6740 switches, providing more than 230 10-Gigabit Ethernet ports as well as 40-Gigabit Ethernet ports.

The series comprises models with optical and copper 10 Gbps ports and 40 Gbps uplinks.

2019:

* Hardware

- Local Area Network (LAN) 2x10Gbps, planned upgrade to 100Gbps

- Wide Area Network (WAN) 100Gbps, 2x10Gbps, upgrade of WAN to nx100Gbps planned

- Local Area Network (LAN) 10Gbps, planned upgrade to 100Gbps

- Wide Area Network (WAN) 100Gbps, 2x10Gbps, upgrade of WAN to 2x100Gbps planned

- 10x SBM-GEM-X2C+ (top-of-rack) in the 5 processor blade crates.

- 4x ProCurve 3500yl-24G for disk and infrastructure servers.

- ProCurve 5406zl - backbone.

- 2x1GbE trunk between the machines and SBM-GEM-X2C+ / ProCurve 3500yl-24G.

- 1x10G between SBM-GEM-X2C+/ProCurve 3500yl-24G and ProCurve 5406zl.

- 1x10G between ProCurve 5406zl and the JINR Border Gateway.

- 2x10G between the JINR Border Gateway and IX

TWikiGroups » Nobody Group

- Member list:

- Set GROUP =

- Persons/group who can change the list:

- Set ALLOWTOPICCHANGE = TWikiAdminGroup

TWikiGroups » OperatorGroup

Use this group for access control of webs and topics.

- Member list:

- Set GROUP = TWikiAdminGroup, AlexGolunov, IvanKashunin,TatianaStrizh, IvanKadochnikov, IgorPelevanyuk,IlyaAlatartsev

- Persons/group who can change the list:

- Set ALLOWTOPICCHANGE = OperatorGroup

My Links

- WelcomeGuest - starting points on TWiki

- TWikiUsersGuide - complete TWiki documentation, Quick Start to Reference

- Sandbox - try out TWiki on your own

- SergeiShmatovSandbox - just for me

My Personal Preferences

- Preference for the editor, default is the WYSIWYG editor. The options are raw, wysiwyg:

- Set EDITMETHOD = wysiwyg

- Fixed pulldown menu-bar, on or off. The menu-bar hides automatically if off.

- Set FIXEDTOPMENU = off

- Show tool-tip topic info on mouse-over of WikiWord links, on or off:

- Set LINKTOOLTIPINFO = off

- More preferences

TWiki has system-wide preference settings defined in TWikiPreferences. You can customize preference settings to your needs: to override a system setting, (1) do a "raw view" on TWikiPreferences, (2) copy a "Set VARIABLE = value" bullet, (3) do a "raw edit" of your user profile page, (4) add the bullet to the bullet list above, and (5) customize the value as needed. Make sure the settings render as real bullets (in "raw edit", a bullet requires 3 or 6 spaces before the asterisk).

Related Topics

- ChangePassword for changing your password

- ChangeEmailAddress for changing your email address

- UserList has a list of other TWiki users

- UserDocumentationCategory is a list of TWiki user documentation

- UserToolsCategory lists all TWiki user tools


Servers Infrastructure

2019:

- Interactive cluster

- Computing farm for CMS:

- CVMFS: CVMFS is used to deploy the large software packages of the collaborations working in the WLCG.

- Storage Systems : dCache (11GB) , EOS (4PB)

2018:

* Hardware

- Worker Nodes (WN): typically SuperMicro Blade, 275 64-bit machines: 2 x CPU, 6-10 cores/CPU

- Total: 4720 cores/slots for batch

2017:

* Hardware

- Worker Nodes (WN): typically SuperMicro Blade

- 100 64-bit machines: 2 x CPU (Xeon X5675 @ 3.07GHz, 6 cores per processor); 48GB RAM, 2x1000GB SATA-II; 2x1GbE.

- 148 64-bit machines: 2 x CPU (Xeon E5-2680 v2 @ 2.80GHz, 10 cores per processor), 64GB RAM; 2x1000GB SATA-II; 2x1GbE.

2014:

- 17 machines: 2 x CPU (Xeon X5650 @ 2.67GHz); 48GB RAM; 500GB SATA-II; 2x1GbE.

- 4 machines: 2 x CPU (Xeon X5650 @ 2.67GHz); 48GB RAM; 2x1000GB SATA-II; 2x1GbE.

- 10 machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

-- TWikiAdminUser - 2014-08-12

TWikiGroups » ShortInstructions

Use this group for access control of webs and topics.

- Member list:

- Set GROUP = TWikiAdminUser

- Persons/group who can change the list:

- Set ALLOWTOPICCHANGE = ShortInstructions

Short User's Guide dCache

File access specifics

Files in dCache are written once and read many times.

Modifying a file directly in dCache is not allowed. Instead, the file is:

1. copied from dCache to local storage,

2. edited,

3. deleted from dCache,

4. written from local storage back to dCache under the old name.
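The four-step modification workflow can be scripted. A hedged bash sketch, assuming `dccp` and the `/usr/lib64/libpdcap.so` preload used on this page work for the URL you pass (for example a gssdcap:// URL on the write door 22126 if DCACHE_IO_TUNNEL no longer takes effect); `dcache_modify` and the `DCAP_PRELOAD` override are hypothetical names, and the edit command is any program that rewrites a local file in place:

```bash
#!/usr/bin/env bash
# Hypothetical helper for the write-once workflow:
# copy out, edit locally, delete from dCache, write back under the same name.
# Usage: dcache_modify URL EDIT_COMMAND [ARGS...]  (the local path is appended)
dcache_modify() {
  local url=$1; shift
  local tmpdir local_copy
  tmpdir=$(mktemp -d) || return 1
  local_copy="$tmpdir/${url##*/}"              # keep the original file name

  # 1. copy from dCache to local storage
  dccp "$url" "$local_copy" || { rm -rf "$tmpdir"; return 1; }
  # 2. edit locally
  "$@" "$local_copy" || { rm -rf "$tmpdir"; return 1; }
  # 3. delete from dCache (unlink under the libpdcap preload, as on this page)
  LD_PRELOAD=${DCAP_PRELOAD-/usr/lib64/libpdcap.so} unlink "$url" \
    || { rm -rf "$tmpdir"; return 1; }
  # 4. write back under the old name; keep the local copy if this fails
  dccp "$local_copy" "$url" \
    || { echo "write-back failed; local copy kept in $local_copy" >&2; return 1; }
  rm -rf "$tmpdir"
}
```

For example, `dcache_modify dcap://lxse-dc01.jinr.ru:22126//pnfs/jinr.ru/data/user/t/trofimov/testfile vi` would open the file in an editor and then replace it in dCache. The local copy is deliberately not deleted when step 4 fails, because at that point the dCache original has already been removed.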

Files can be accessed only from the interactive farm (lxpub01-lxpub05.jinr.ru), via the dcap protocol and the dccp copy utility.

Read-only operations can use

lxse-dc01.jinr.ru:22125

Operations that write (including deleting a file) require

lxse-dc01.jinr.ru:22126

Example: reading, writing and deleting the file testfile of user trofimov

1. lxpub03:~ > LD_PRELOAD=/usr/lib64/libpdcap.so

2. lxpub03:~ > export DCACHE_IO_TUNNEL=/usr/lib64/dcap/libgssTunnel.so

3. lxpub03:~ > ls dcap://lxse-dc01.jinr.ru:22125//pnfs/jinr.ru/data/user/t/trofimov

external iiii totest totest1 xxx xxx0022 xxx0044 xxx0045 xxx33 xxx3366 xxx3378 xxx3379 xxx345 xxx9988

4. lxpub03:~ > dccp testfile dcap://lxse-dc01.jinr.ru:22126//pnfs/jinr.ru/data/user/t/trofimov

26317 bytes (25.7 kiB) in 0 seconds

5. lxpub03:~ > ls dcap://lxse-dc01.jinr.ru:22126//pnfs/jinr.ru/data/user/t/trofimov/testfile -l

-rw-r--r-- 0 trofimov lhep 26317 Feb 15 13:22 dcap://lxse-dc01.jinr.ru:22126//pnfs/jinr.ru/data/user/t/trofimov/testfile

A file can also be read without write access:

lxpub03:~ > export -n DCACHE_IO_TUNNEL

lxpub03:~ > LD_PRELOAD=/usr/lib64/libpdcap.so

lxpub03:~ > unlink dcap://lxse-dc01.jinr.ru:22126//pnfs/jinr.ru/data/user/t/trofimov/testfile

lxpub03:~ > ls dcap://lxse-dc01.jinr.ru:22126//pnfs/jinr.ru/data/user/t/trofimov/testfile

/bin/ls: cannot access dcap://lxse-dc01.jinr.ru:22126//pnfs/jinr.ru/data/user/t/trofimov/testfile: No such file or directory

-- TWikiAdminUser - 2015-02-20

Storage Elements(SE)

Storage System dCache:

2018:

* Hardware

Storage System dCache: typically Supermicro and DELL

- 1st - Disk Only: 7.2 PB

- 2nd - support Mass Storage System: 1.1PB.

- IBM TS3500, 3440xLTO-6 data cartridges;

- 12xLTO-6 tape drives FC8, 9 PB

- OS: Scientific Linux release 6 x86_64.

- BATCH : Torque 4.2.10 (home made)

- Maui 3.3.2 (home made)

- CMS Phedex

- dCache-3.2

- Enstore 4.2.2 for tape robot.

- 31 disk servers: 2 x CPU (Xeon E5-2650 @ 2.00GHz); 128GB RAM; 63TB ZFS (24x3000GB NL SAS); 2x10G.

- 24 disk servers: 2 x CPU (Xeon E5-2660 v3 @ 2.60GHz); 128GB RAM; 76TB ZFS (16x6000GB NL SAS); 2x10G

- 4 disk servers: 2 x CPU (Xeon E5-2650 v4 @ 2.29GHz); 128GB RAM; 150TB ZFS (24x8000GB NLSAS); 2x10G

- 3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

- 8 KVM (Kernel-based Virtual Machine) for access protocols support.

- 8 disk servers: 2 x CPU (Xeon X5650 @2.67GHz); 96GB RAM; 63TB h/w RAID6 (24x3000GB SATAIII); 2x10G; Qlogic Dual 8Gb FC.

- 8 disk servers: 2 x CPU (E5-2640 v4 @ 2.40GHz); 128GB RAM; 70TB ZFS (16x6000GB NLSAS); 2x10G; Qlogic Dual 16Gb FC.

- 1 tape robot: IBM TS3500, 2000xLTO Ultrium-6 data cartridges; 12xLTO Ultrium-6 tape drives FC8; 11000TB.

- 3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

- 6 KVM machines for access protocols support

- dCache-2.16

- Enstore 4.2.2 for tape robot.

- 12 disk servers: 2 x CPU (Xeon E5-2660 v3 @ 2.60GHz); 128GB RAM; 76TB ZFS (16x6000GB NL SAS); 2x10G.

Total space: 2.8PB

- 3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

- 8 KVM (Kernel-based Virtual Machine) for access protocols support.

2nd - support Mass Storage System:

- 8 disk servers: 2 x CPU (Xeon X5650 @2.67GHz); 96GB RAM; 63TB h/w RAID6 (24x3000GB SATAIII); 2x10G; Qlogic Dual 8Gb FC.

Total disk buffer space: 0.5PB.

- 1 tape robot: IBM TS3500, 2000xLTO Ultrium-6 data cartridges; 12xLTO Ultrium-6 tape drives FC8; 5400TB.

- 3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

- 6 KVM machines for access protocols support

* Software

- dCache-2.10

- Enstore 4.2.2 for tape robot.

2014:

* Hardware

2 facilities SE (dCache)

1st - Disk Only:

- 3 disk servers: 65906GB h/w RAID6 (24x3000GB SATAIII); 2x1GbE; 48GB RAM

- 5 disk servers: 65906GB h/w RAID6 (24x3000GB SATAIII); 10GbE; 48GB RAM

- 3 control computers: 2xCPU (Xeon X5650 @ 2.67GHz); 48GB RAM; 500GB SATA-II; 2x1GbE.

- 8 computers support access protocols. All are virtual machines (KVM).

2nd - support Mass Storage System:

- 2 disk servers: 65906GB h/w RAID6 (24x3000GB SATAIII); 2x1GbE; 48GB RAM

- 1 tape robot: IBM TS3200, 24xLTO5; 4xUltrium5 FC8; 72TB.

- 3 control computers: 2xCPU (Xeon X5650 @ 2.67GHz); 48GB RAM; 500GB SATA-II; 2x1GbE.

- 6 computers support access protocols. All are virtual machines (KVM).

* Software

- dcache-2.6.31-1 (dcache.org) dcache disk only

- dcache-2.2.27 (dcache.org) dcache MSS

Storage Elements(SE)

2019:

Storage System: dCache

The storage system consists of disk arrays and long-term data storage on tapes and is supported by the dCache-3.2 and Enstore 4.2.2 software.

(hardware: typically Supermicro and DELL)

- 1st - Disk Only: 8.3PB (disk 7.2PB, buffer 1.2PB)

- 1 tape robot: IBM TS3500, 3440xLTO-6 data cartridges; 12xLTO-6 tape drives FC8; 11 PB.

- CMS Phedex

- dCache-3.2

- Enstore 4.2.2 for tape robot.

- BATCH : Torque 4.2.10 (home made)/Maui 3.3.2 (home made)

- EOS aquamarine

- WLCG ( 2xCREAM, 4xARGUS, BDII top, BDII site, APEL parsers, APEL publisher, EMI-UI, 220xEMI-WN + gLExec-wn, 4xFTS3, LFC, WMS, L&B, glite-proxyrenewal)

2018:

* Hardware

Storage System dCache: typically Supermicro and DELL

- 1st - Disk Only: 7.2 PB

- 2nd - support Mass Storage System: 1.1PB.

- IBM TS3500, 3440xLTO-6 data cartridges;

- 12xLTO-6 tape drives FC8, 9 PB

- OS: Scientific Linux release 6 x86_64.

- BATCH : Torque 4.2.10 (home made)

- Maui 3.3.2 (home made)

- CMS Phedex

- dCache-3.2

- Enstore 4.2.2 for tape robot.
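As a rough cross-check of the 9 PB tape figure, a bash sketch that multiplies the cartridge count by the native (uncompressed) LTO-6 capacity of 2.5 TB per cartridge; that per-cartridge value comes from the LTO-6 specification, not from this page:

```bash
#!/usr/bin/env bash
# Native tape capacity = number of cartridges x native capacity per cartridge.
cartridges=3440
lto6_native_gb=2500      # LTO-6 native capacity: 2.5 TB per cartridge
total_tb=$(( cartridges * lto6_native_gb / 1000 ))
echo "${cartridges} x LTO-6 = ${total_tb} TB (~$(( (total_tb + 500) / 1000 )) PB native)"
```

3440 cartridges give 8600 TB, which rounds to the 9 PB quoted for 2018; compressed capacity would be higher and depends on the data.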

2017:

* Hardware

Storage System dCache:

1st - Disk for ATLAS & CMS: typically Supermicro and DELL

- 31 disk servers: 2 x CPU (Xeon E5-2650 @ 2.00GHz); 128GB RAM; 63TB ZFS (24x3000GB NL SAS); 2x10G.

- 24 disk servers: 2 x CPU (Xeon E5-2660 v3 @ 2.60GHz); 128GB RAM; 76TB ZFS (16x6000GB NL SAS); 2x10G

- 4 disk servers: 2 x CPU (Xeon E5-2650 v4 @ 2.29GHz); 128GB RAM; 150TB ZFS (24x8000GB NLSAS); 2x10G

- 3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

- 8 KVM (Kernel-based Virtual Machine) for access protocols support.

- 8 disk servers: 2 x CPU (Xeon X5650 @2.67GHz); 96GB RAM; 63TB h/w RAID6 (24x3000GB SATAIII); 2x10G; Qlogic Dual 8Gb FC.

- 8 disk servers: 2 x CPU (E5-2640 v4 @ 2.40GHz); 128GB RAM; 70TB ZFS (16x6000GB NLSAS); 2x10G; Qlogic Dual 16Gb FC.

- 1 tape robot: IBM TS3500, 2000xLTO Ultrium-6 data cartridges; 12xLTO Ultrium-6 tape drives FC8; 11000TB.

- 3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

- 6 KVM machines for access protocols support

- dCache-2.16

- Enstore 4.2.2 for tape robot.

12 disk servers: 2 x CPU (Xeon E5-2660 v3 @ 2.60GHz); 128GB RAM; 76TB ZFS (16x6000GB NL SAS); 2x10G.

Total space: 2.8PB

3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

8 KVM (Kernel-based Virtual Machine) for access protocols support.

2nd - support Mass Storage System:

8 disk servers: 2 x CPU (Xeon X5650 @2.67GHz); 96GB RAM; 63TB h/w RAID6 (24x3000GB SATAIII); 2x10G; Qlogic Dual 8Gb FC.

Total disk buffer space: 0.5PB.

1 tape robot: IBM TS3500, 2000xLTO Ultrium-6 data cartridges; 12xLTO Ultrium-6 tape drives FC8; 5400TB.

3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

6 KVM machines for access protocols support

* Software

dCache-2.10

Enstore 4.2.2 for tape robot.

2014:

* Hardware

2 SE (dCache) facilities

1st - Disk Only:

3 disk servers: 65906GB h/w RAID6 (24x3000GB SATAIII); 2x1GbE; 48GB RAM

5 disk servers: 65906GB h/w RAID6 (24x3000GB SATAIII); 10GbE; 48GB RAM

3 control computers: 2xCPU (Xeon X5650 @ 2.67GHz); 48GB RAM; 500GB SATA-II; 2x1GbE.

8 computers support access protocols. All run as virtual machines (KVM).

2nd - support Mass Storage System:

2 disk servers: 65906GB h/w RAID6 (24x3000GB SATAIII); 2x1GbE; 48GB RAM

1 tape robot: IBM TS3200, 24xLTO5; 4xUltrium5 FC8; 72TB.

3 control computers: 2xCPU (Xeon X5650 @ 2.67GHz); 48GB RAM; 500GB SATA-II; 2x1GbE.

6 computers support access protocols. All run as virtual machines (KVM).

* Software

dcache-2.6.31-1 (dcache.org) dcache disk only

dcache-2.2.27 (dcache.org) dcache MSS -- TWikiAdminUser - 2019-02-28

Storage Systems

2018:

* Hardware

Storage System : dCache

Typically Supermicro and DELL


- 1st - Disk Only: 7.2 PB

- 2nd - support Mass Storage System: 1.1PB.

- IBM TS3500, 3440xLTO-6 data cartridges;

- 12xLTO-6 tape drives FC8, 9 PB

- 31 disk servers: 2 x CPU (Xeon E5-2650 @ 2.00GHz); 128GB RAM; 63TB ZFS (24x3000GB NL SAS); 2x10G.

- 24 disk servers: 2 x CPU (Xeon E5-2660 v3 @ 2.60GHz); 128GB RAM; 76TB ZFS (16x6000GB NL SAS); 2x10G

- 4 disk servers: 2 x CPU (Xeon E5-2650 v4 @ 2.29GHz) 128GB RAM; 150TB ZFS (24x8000GB NLSAS), 2x10G

- 3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

- 8 KVM (Kernel-based Virtual Machine) for access protocols support.

- 8 disk servers: 2 x CPU (Xeon X5650 @2.67GHz); 96GB RAM; 63TB h/w RAID6 (24x3000GB SATAIII); 2x10G; Qlogic Dual 8Gb FC.

- 8 disk servers: 2 x CPU (E5-2640 v4 @ 2.40GHz); 128GB RAM; 70TB ZFS (16x6000GB NLSAS); 2x10G; Qlogic Dual 16Gb FC.

- 1 tape robot: IBM TS3500, 2000xLTO Ultrium-6 data cartridges; 12xLTO Ultrium-6 tape drives FC8; 11000TB.

- 3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

- 6 KVM machines for access protocols support

- 30 disk servers: 2 x CPU (Xeon E5-2650 @ 2.00GHz); 128GB RAM; 63TB h/w RAID6 (24x3000GB NL SAS); 2x10G.

- 12 disk servers: 2 x CPU (Xeon E5-2660 v3 @ 2.60GHz); 128GB RAM; 76TB ZFS (16x6000GB NL SAS); 2x10G.

- 3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

- 8 KVM (Kernel-based Virtual Machine) for access protocols support.

- 8 disk servers: 2 x CPU (Xeon X5650 @2.67GHz); 96GB RAM; 63TB h/w RAID6 (24x3000GB SATAIII); 2x10G; Qlogic Dual 8Gb FC.

- 1 tape robot: IBM TS3500, 2000xLTO Ultrium-6 data cartridges; 12xLTO Ultrium-6 tape drives FC8; 5400TB.

- 3 head node machines: 2 x CPU (Xeon E5-2683 v3 @ 2.00GHz); 128GB RAM; 4x1000GB SAS h/w RAID10; 2x10G.

- 6 KVM machines for access protocols support
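As a rough cross-check, the per-server capacities in the list above can be summed per tier. This is a minimal sketch that assumes the quoted per-server figures (63TB, 76TB) are usable capacity and uses decimal units (1 PB = 1000 TB); under those assumptions it reproduces the "Total space: 2.8PB" and "Total disk buffer space: 0.5PB" figures given for this configuration elsewhere on this page. The names DISK_ONLY, MSS_BUFFER and total_tb are illustrative only.

```python
# Rough capacity cross-check for the dCache configuration listed above.
# Assumption: per-server figures are usable capacity in decimal TB.

# (number of servers, usable TB per server)
DISK_ONLY = [(30, 63), (12, 76)]   # 1st tier: disk-only pools
MSS_BUFFER = [(8, 63)]             # 2nd tier: disk buffer in front of the tape robot


def total_tb(groups):
    """Sum count * per-server TB over all server groups."""
    return sum(count * tb for count, tb in groups)


disk_only_tb = total_tb(DISK_ONLY)
mss_buffer_tb = total_tb(MSS_BUFFER)

print(f"1st tier (disk only): {disk_only_tb} TB = {disk_only_tb / 1000:.1f} PB")
print(f"2nd tier (MSS buffer): {mss_buffer_tb} TB = {mss_buffer_tb / 1000:.1f} PB")
```

Running it prints 2802 TB (2.8 PB) for the disk-only tier and 504 TB (0.5 PB) for the MSS buffer. Head nodes and KVM machines are left out because they do not contribute pool space.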

2nd - support Mass Storage System:

2 disk servers: 65906GB h/w RAID6 (24x3000GB SATAIII); 2x1GbE; 48GB RAM

1 tape robot: IBM TS3200, 24xLTO5; 4xUltrium5 FC8; 72TB.

3 control computers: 2xCPU (Xeon X5650 @ 2.67GHz); 48GB RAM; 500GB SATA-II; 2x1GbE.

6 computers support access protocols. All run as virtual machines (KVM).

TWiki Administrator Group

This is a superuser group that has access to all content, regardless of access control. This group also has access to the configure script for system-level configuration.

- Member list (comma-separated list):

- Set GROUP = VictorZhiltsov, ValeryMitsyn

- Persons/group who can change the list:

- Set ALLOWTOPICCHANGE = TWikiAdminGroup

How to log in as TWikiAdminUser

- Log in as the internal TWiki administrator:

- internal admin login (use the username suggested and the password set in configure).

- Log out from Administrator.

If you have lost the configure password, you can reset it by editing the lib/LocalSite.cfg file, deleting the line that starts with $TWiki::cfg{Password}, and then setting a new password by saving your settings in configure.
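Deleting the $TWiki::cfg{Password} line from lib/LocalSite.cfg can also be scripted. The helper below is a hypothetical sketch (drop_configure_password is not part of TWiki): it writes a LocalSite.cfg.bak copy first, then removes every line that starts with $TWiki::cfg{Password}. The default path assumes you run it from the TWiki installation directory; afterwards, set a new password by saving your settings in configure.

```python
from pathlib import Path

# Lines beginning with this prefix hold the configure password.
PASSWORD_PREFIX = "$TWiki::cfg{Password}"


def drop_configure_password(cfg_path="lib/LocalSite.cfg"):
    """Hypothetical helper: remove the configure password line(s) from LocalSite.cfg.

    A backup copy (<name>.bak) is written next to the file before it is changed.
    Returns the number of lines removed.
    """
    path = Path(cfg_path)
    text = path.read_text()
    # Keep an untouched copy next to the original before changing anything.
    path.with_name(path.name + ".bak").write_text(text)
    lines = text.splitlines(keepends=True)
    kept = [line for line in lines if not line.startswith(PASSWORD_PREFIX)]
    path.write_text("".join(kept))
    return len(lines) - len(kept)
```

Usage: drop_configure_password("lib/LocalSite.cfg"). Editing the file by hand is equally valid; the script only makes the backup step hard to forget.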

If you haven't previously set up an administrator, follow these steps:

- Authenticate as the internal TWiki administrator:

- internal admin login (use the username suggested and the password set in configure).

- Edit this topic

- Insert the wikinames of admin users in the TWiki Administrator Group by listing them in the GROUP setting

(example: * Set GROUP = JohnSmith, JamesBond)

- Save this topic

- Log out from the internal TWikiAdminUser

- Verify that new members show up properly in the group listing at TWikiGroups

- Always keep this topic write-protected by keeping the already defined ALLOWTOPICCHANGE setting

- The ALLOWTOPICCHANGE and ALLOWTOPICRENAME settings in TWiki.TWikiPreferences and Main.TWikiPreferences have already been set to this group (TWikiAdminGroup), restricting edit of site-wide preferences to the TWiki Administrator Group

TWiki Administrator User

The TWikiAdminUser has been added to TWiki to make it possible to log in without needing to create a TWiki user, or to temporarily log in as TWikiAdminUser using the password set in configure and then log back out to the same user and group as before. This means it is no longer necessary to add yourself to the TWikiAdminGroup: you can quickly switch to the Admin User (and back to your own user) only when you need to.

How to log in as TWikiAdminUser

- Log in as the internal TWiki administrator:

- internal admin login (use the username suggested and the password set in configure).

- Log out from Administrator.

If you have lost the configure password, you can reset it by editing the lib/LocalSite.cfg file, deleting the line that starts with $TWiki::cfg{Password}, and then setting a new password by saving your settings in configure.

Prerequisites

- Security Setup : Sessions : {UseClientSessions} needs to be enabled in configure.

- A configure password must be set (otherwise the Admin login is automatically disabled).

- If your TWiki is configured to use ApacheLoginManager, you will need to log in as a valid user first.

TWiki Contributor

Not an actual user of this site, but a person devoting some of their time to the Open Source TWiki project. TWikiContributor lists the people involved. Related topics: TWikiUsers, TWikiRegistration

Use this group for access control of webs and topics.

- Member list:

- Set GROUP = TWikiGuest

- Persons/group who can change the list:

- Set ALLOWTOPICCHANGE =

TWiki Groups

Use these groups to define fine-grained access control in TWiki. Keep the WikiCulture in mind before restricting access: experience shows that unrestricted write access works very well because:

- There is enough peer pressure to post only appropriate content.

- Content does not get lost because topics are under revision control.

- A topic can be rolled back to a previous version.

The TWikiGuest User

A guest of this TWiki site, or a user who is not logged in.

- Welcome Guest - look here first

- Tutorial - 20-minute TWiki tutorial

- User's Guide - documentation for TWiki users

- Frequently Asked Questions - about TWiki

- Reference Manual - documentation for system administrators

- Site Map - to navigate to content

Local customisations of site-wide preferences

Site Specific Site-wide Preferences

Final Preferences

- FINALPREFERENCES locks site-level preferences that are not allowed to be overridden by WebPreferences or user preferences:

- Set FINALPREFERENCES = ATTACHFILESIZELIMIT, PREVIEWBGIMAGE, WIKITOOLNAME, WIKIHOMEURL, ALLOWROOTCHANGE, DENYROOTCHANGE, TWIKILAYOUTURL, TWIKISTYLEURL, TWIKICOLORSURL, USERSWEB, SYSTEMWEB, DOCWEB
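To illustrate what FINALPREFERENCES does, here is a simplified model of layered preference lookup. It assumes settings are applied level by level in the order given (most general first) and that a name listed in FINALPREFERENCES at one level can no longer be changed by any later level. It is a sketch, not TWiki's own Perl implementation, which distinguishes more levels (for example topic-level settings); resolve_preferences and the sample values are hypothetical.

```python
def resolve_preferences(levels):
    """Simplified model of layered preference lookup with FINALPREFERENCES.

    `levels` is a list of dicts, most general (site level) first. Later levels
    override earlier ones, except for names that an earlier level listed in its
    FINALPREFERENCES setting.
    """
    resolved = {}
    final = set()
    for settings in levels:
        for name, value in settings.items():
            if name in final:
                continue  # locked by a more general level: ignore the override
            resolved[name] = value
        # Names declared final at this level are locked for all later levels
        # (unless FINALPREFERENCES itself was already locked).
        if "FINALPREFERENCES" in settings and "FINALPREFERENCES" not in final:
            declared = settings["FINALPREFERENCES"]
            final.update(n.strip() for n in declared.split(",") if n.strip())
    return resolved


site = {
    "WIKITOOLNAME": "TWiki",
    "USERSWEB": "Main",
    "FINALPREFERENCES": "WIKITOOLNAME, USERSWEB",
}
web = {"WIKITOOLNAME": "MyWiki", "EDITMETHOD": "raw"}
user = {"EDITMETHOD": "wysiwyg", "USERSWEB": "People"}

prefs = resolve_preferences([site, web, user])
print(prefs["WIKITOOLNAME"], prefs["USERSWEB"], prefs["EDITMETHOD"])
```

The locked names keep their site-level values (TWiki, Main), while EDITMETHOD, which is not final, takes the most specific value (wysiwyg).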

Security Settings

- Only TWiki administrators are allowed to change this topic:

- Set ALLOWTOPICCHANGE = TWikiAdminGroup

- Set ALLOWTOPICRENAME = TWikiAdminGroup

- Disable WYSIWYG editor for this preferences topic only:

- Local TINYMCEPLUGIN_DISABLE = on

The TWikiRegistrationAgent User

This is the user TWiki acts as when it registers new users. It has special access to write to TWikiUsers and does not have an entry in the password system. Related topics: TWikiUsers, TWikiRegistration

List of TWiki Users

Below is a list of users with accounts. If you want to edit topics or see protected areas of the site, you can get added to the list by registering: fill out the form in TWikiRegistration. If you forget your password, ResetPassword will get a new one sent to you. Related topics: TWikiGroups, UserList, UserListByDateJoined, UserListByLocation

- A - - - - -

- AlexGolunov - AlexGolunov - 2015-04-09

- AndreaniMonica - AndreaniMonica - 2020-09-03

- AndreyBaginyan - AndreyBaginyan - 2015-07-07

- AndreyDolbilov - AndreyDolbilov - 2015-07-07

- AwadRajpoot - AwadRajpoot - 2022-02-09

- B - - - - -

- BenerNih - BenerNih - 2018-03-25

- BiliPont - BiliPont - 2017-06-23

- C - - - - -

- CartsBoxes - CartsBoxes - 2023-01-30

- CarymartRF - CarymartRF - 2018-03-24

- ChamCuu - ChamCuu - 2018-06-21

- CommandeRadio - CommandeRadio - 2018-05-05

- D - - - - -

- DmhTeam01 - DmhTeam01 - 2023-12-16

- DmhTeam02 - DmhTeam02 - 2023-12-16

- DolansFatih - DolansFatih - 2024-03-11

- E - - - - -

- F - - - - -

- G - - - - -

- GroNat - GroNat - 2015-04-09

- H - - - - -

- I - - - - -

- IgorPelevanyuk - IgorPelevanyuk - 2015-07-07

- IlyaAlatartsev - IlyaAlatartsev - 2019-02-28

- IsabelPt - IsabelPt - 2017-06-23

- IvanKadochnikov - IvanKadochnikov - 2015-07-07

- IvanKashunin - IvanKashunin - 2015-04-09

- IvanPupkov - IvanPupkov - 2015-09-15

- J - - - - -

- JasonSanders - JasonSanders - 2017-04-04

- JoshAdams - JoshAdams - 2017-10-09

- K - - - - -

- KuboPro - KuboPro - 2018-01-18

- L - - - - -

- LaetitiaMOREAU - LaetitiaMOREAU - 2017-06-27

- LoverbeautyChen - LoverbeautyChen - 2017-11-23

- M - - - - -

- MoleauPont - MoleauPont - 2017-06-23

- MonicaAndreani - MonicaAndreani - 2020-09-03

- N - - - - -

- NatalieG - NatalieG - 2014-05-17

- O - - - - -

- P - - - - -

- PaulineManceau - PaulineManceau - 2017-06-27

- Q - - - - -

- R - - - - -

- S - - - - -

- SaglikAdams - SaglikAdams - 2017-10-09

- SaglikBilgileri - SaglikBilgileri - 2017-10-09

- SakaCamprung - SakaCamprung - 2016-11-10

- SergeiShmatov - SergeiShmatov - 2014-06-02

- SitusPokerOnlineResmi - SitusPokerOnlineResmi - 2018-09-19

- T - - - - -

- TWikiContributor - 2005-01-01

- TWikiGuest - guest - 1999-02-10

- TWikiRegistrationAgent - 2005-01-01

- TatianaStrizh - TatianaStrizh - 2014-07-01

- TikhonenkoElena - TikhonenkoElena - 2014-08-14

- U - - - - -

- UmerBhatti - UmerBhatti - 2023-01-30

- UnknownUser - 2005-01-01

- UsmanBhatti - UsmanBhatti - 2023-01-30

- V - - - - -

- ValeryMitsyn - ValeryMitsyn - 2014-05-20

- VictorZhiltsov - VictorZhiltsov - 2014-05-19

- ViktorRiskov - ViktorRiskov - 2021-06-26

- VladimirTrofimov - VladimirTrofimov - 2014-05-21

- W - - - - -

- WaralabaAlatListrik - WaralabaAlatListrik - 2016-03-14

- X - - - - -

- Y - - - - -

- Z - - - - -

- TWikiContributor - placeholder for a TWiki developer, and is used in TWiki documentation

- TWikiGuest - guest user, used as a fallback if the user can't be identified

- TWikiRegistrationAgent - special user used during the new user registration process

- UnknownUser - used where the author of a previously stored piece of data can't be determined

- Set ALLOWTOPICCHANGE = TWikiAdminGroup, TWikiRegistrationAgent

My Links

- WelcomeGuest - starting points on TWiki

- TWikiUsersGuide - complete TWiki documentation, Quick Start to Reference

- Sandbox - try out TWiki on your own

- TatianaStrizhSandbox - just for me

My Personal Preferences

- Preference for the editor, default is the WYSIWYG editor. The options are raw, wysiwyg:

- Set EDITMETHOD = wysiwyg

- Fixed pulldown menu-bar, on or off. The menu-bar hides automatically if off.

- Set FIXEDTOPMENU = off

- Show tool-tip topic info on mouse-over of WikiWord links, on or off:

- Set LINKTOOLTIPINFO = off

- More preferences


TWiki has system-wide preference settings defined in TWikiPreferences, and you can customize them to your needs. To override a system setting, (1) do a "raw view" on TWikiPreferences, (2) copy a "Set VARIABLE = value" bullet, (3) do a "raw edit" of your user profile page, (4) add the bullet to the bullet list above, and (5) customize the value as needed. Make sure the settings render as real bullets (in "raw edit", a bullet requires 3 or 6 spaces before the asterisk).
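The raw-edit rule in the paragraph above can be checked mechanically. The sketch below is a hypothetical helper, not part of TWiki: it accepts a line only if it starts with exactly 3 or 6 spaces, an asterisk, and a "Set NAME = value" statement, mirroring the wording here. TWiki's own parser may accept other forms (for example "Local" settings), so treat a rejection as a hint to look closer, not as proof the line is broken.

```python
import re

# 3 or 6 spaces, '*', then "Set NAME = value" (the value may be empty).
PREF_BULLET = re.compile(r"^(?: {3}| {6})\* Set ([A-Z0-9_]+)\s*=\s*(.*)$")


def parse_preference(line):
    """Return (name, value) for a valid preference bullet, else None."""
    match = PREF_BULLET.match(line.rstrip("\n"))
    if match is None:
        return None
    return match.group(1), match.group(2).strip()


for raw in ["   * Set EDITMETHOD = raw",
            "      * Set FIXEDTOPMENU = on",
            "  * Set LINKTOOLTIPINFO = off"]:
    print(repr(raw), "->", parse_preference(raw))
```

The last sample has only two spaces before the asterisk, so it is rejected and would not render as a real preference bullet.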

Related Topics

- ChangePassword for changing your password

- ChangeEmailAddress for changing your email address

- UserList has a list of other TWiki users

- UserDocumentationCategory is a list of TWiki user documentation

- UserToolsCategory lists all TWiki user tools


My Links

- WelcomeGuest - starting points on TWiki

- TWikiUsersGuide - complete TWiki documentation, Quick Start to Reference

- Sandbox - try out TWiki on your own

- TikhonenkoElenaSandbox - just for me

My Personal Preferences

- Preference for the editor, default is the WYSIWYG editor. The options are raw, wysiwyg:

- Set EDITMETHOD = wysiwyg

- Fixed pulldown menu-bar, on or off. The menu-bar hides automatically if off.

- Set FIXEDTOPMENU = off

- Show tool-tip topic info on mouse-over of WikiWord links, on or off:

- Set LINKTOOLTIPINFO = off

- More preferences


TWiki has system-wide preference settings defined in TWikiPreferences, and you can customize them to your needs. To override a system setting, (1) do a "raw view" on TWikiPreferences, (2) copy a "Set VARIABLE = value" bullet, (3) do a "raw edit" of your user profile page, (4) add the bullet to the bullet list above, and (5) customize the value as needed. Make sure the settings render as real bullets (in "raw edit", a bullet requires 3 or 6 spaces before the asterisk).

Related Topics

- ChangePassword for changing your password

- ChangeEmailAddress for changing your email address

- UserList has a list of other TWiki users

- UserDocumentationCategory is a list of TWiki user documentation

- UserToolsCategory lists all TWiki user tools


Transactions

2018:

- The CMS Tier1 at JINR: five years of operations, Tatiana Strizh, GRID-2018

- Tier-1 CMS at JINR: Status and Perspective 2018, T.A. Strizh

- Development of JINR Tier-1 service monitoring system, I.S. Pelevanyuk, GRID-2018

- JINR Tier-1 service monitoring system: Ideas and Design, I.S. Kadochnikov, I.S. Pelevanyuk

2017:

- JINR Grid Tier-1@Tier-2, Dr. Tatiana STRIZH, NEC-2017

2016:

- Tier-1 CMS at JINR: Past, Present and Future, Tatiana STRIZH

- JINR Tier-1 service monitoring system: Ideas and Design, Igor PELEVANYUK

- PhEDEx - main component in data management system in the CMS experiment, Nikolay VOYTISHIN

- Status of the RDMS CMS Computing, Elena TIKHONENKO

- JINR Tier-1 centre for the CMS experiment at LHC

- The first Tier-1 center in Russia was opened in Dubna

- JINR TIER-1-level computing system for the CMS experiment at LHC: status and perspectives, T. STRIZH, JINR

- JINR TIER-1-Level Computing System for the CMS Experiment at LHC: Status and Perspectives, Tatiana STRIZH, JINR

- RDMS CMS Computing: Current Status and Plans, Elena TIKHONENKO, JINR

- A highly reliable data center network topology Tier 1 at JINR, Mr. Andrey BAGINYAN, JINR

- Monitoring and services of CMS Remote Operational Center in JINR, Alexander GOLUNOV, JINR

- LHCOPN/LHCONE: first experiences in using them in RRC-KI, Dr. Eygene RYABINKIN, NRC Kurchatov Institute

- Summary: Technical interchange meeting: Computing models, Software and Data Processing for the future HENP experiments, Prof. Markus SCHULZ, CERN IT

- Workload Management System for Big Data on Heterogeneous Distributed Computing Resources, Danila OLEYNIK, JINR/UTA

- Experience in organizing and using EOS-managed disk storage in the Tier-1 grid cluster at NRC "Kurchatov Institute", Igor TKACHENKO, NRC-KI

The UnknownUser User

UnknownUser is a reserved name in TWiki. If UnknownUser appears, it is probably because the author information for a topic could not be recovered, for example because the topic was modified by a non-TWiki tool. Related topics: TWikiUsers, TWikiRegistration

User List sorted by name

Related topics: TWikiGroups, TWikiUsers, UserListByDateJoined, UserListByLocation

| Name | Contact | Department | Organization | Location | Country |
|---|---|---|---|---|---|
| Natalie Gromova | | LIT | JINR | Dubna | Russia |
| Sergei Shmatov | | | JINR | Dubna | Russia |
| Tatiana Strizh | | | JINR | | Russia |
| Tikhonenko Elena | | | JINR | | Russia |
| Valery Mitsyn | | | JINR | | Russia |
| Victor Zhiltsov | | LIT -216a | JINR | Dubna | Russia |
| Vladimir Trofimov | | JINR | LIT | | Russia |

User List sorted by date joined / updated

Related topics: TWikiGroups, TWikiUsers, UserList, UserListByLocation

| Date joined | Last updated | FirstName | LastName | Organization | Country |
|---|---|---|---|---|---|
| 2014-08-14 - 08:04 | 2014-08-14 - 08:04 | Tikhonenko | Elena | | Russia |
| 2014-07-01 - 19:39 | 2014-07-01 - 19:39 | Tatiana | Strizh | | Russia |
| 2014-06-02 - 11:21 | 2014-06-02 - 11:36 | Sergei | Shmatov | | Russia |
| 2014-05-26 - 17:14 | 2014-07-30 - 13:00 | AlienB | | | |
| 2014-05-21 - 12:40 | 2014-05-26 - 11:57 | Vladimir | Trofimov | | Russia |
| 2014-05-20 - 10:14 | 2014-05-20 - 13:02 | Valery | Mitsyn | | Russia |
| 2014-05-19 - 09:26 | 2014-06-02 - 11:04 | Victor | Zhiltsov | | Russia |
| 2014-05-17 - 17:05 | 2014-08-01 - 11:46 | Natalie | Gromova | | Russia |

User List sorted by location

Related topics: TWikiGroups, TWikiUsers, UserList, UserListByDateJoined

| Country | State | FirstName | LastName | Organization |
|---|---|---|---|---|
| Russia | | Natalie | Gromova | |
| Russia | | Tatiana | Strizh | |
| Russia | | Valery | Mitsyn | |
| Russia | | Vladimir | Trofimov | |
| Russia | | Sergei | Shmatov | |
| Russia | | Tikhonenko | Elena | |
| Russia | | Victor | Zhiltsov | |
| | | AlienB | | |

Header of User Profile Pages

Note: This is a maintenance topic, used by the TWiki Administrator. The part between the horizontal rules gets included at the top of every TWikiUsers profile page. The header can be customized to the needs of your organization. The TWiki:TWiki.UserHomepageSupplement has some additional documentation and ideas on customizing the user profile pages.

- Set ALLOWTOPICCHANGE = TWikiAdminGroup

Short User's Guide dCache

* Short User's Guide dCache

* dcap client & DCACHE_IO_TUNNEL

-- TWikiAdminUser - 2015-04-17